Debugging RAG Chatbots and AI Agents with Sessions

Have you ever wondered at which stage of a multi-step process your AI model starts hallucinating? Perhaps you’ve noticed consistent issues with a specific part of your AI agent workflow?

These are common questions developers face when building AI agents and Retrieval Augmented Generation (RAG) chatbots. Getting reliable responses and minimizing errors like hallucination is incredibly challenging without visibility into how users interact with your Large Language Model (LLM).

Addressing these pitfalls is crucial to building robust and reliable AI agents and RAG chatbots. In this blog post, we will look at how to use Helicone’s Sessions feature to help you maintain context, reduce errors, and improve the overall performance of your LLM app.

What you will learn:

- AI agents and how they work

- Challenges of debugging AI agents

- Introduction to Sessions

- Using Sessions in Helicone

- How industries use Sessions to debug AI agents

First, let’s talk about AI agents. Please skip ahead to Using Sessions in Helicone if you are familiar with the concept.

What are AI agents?

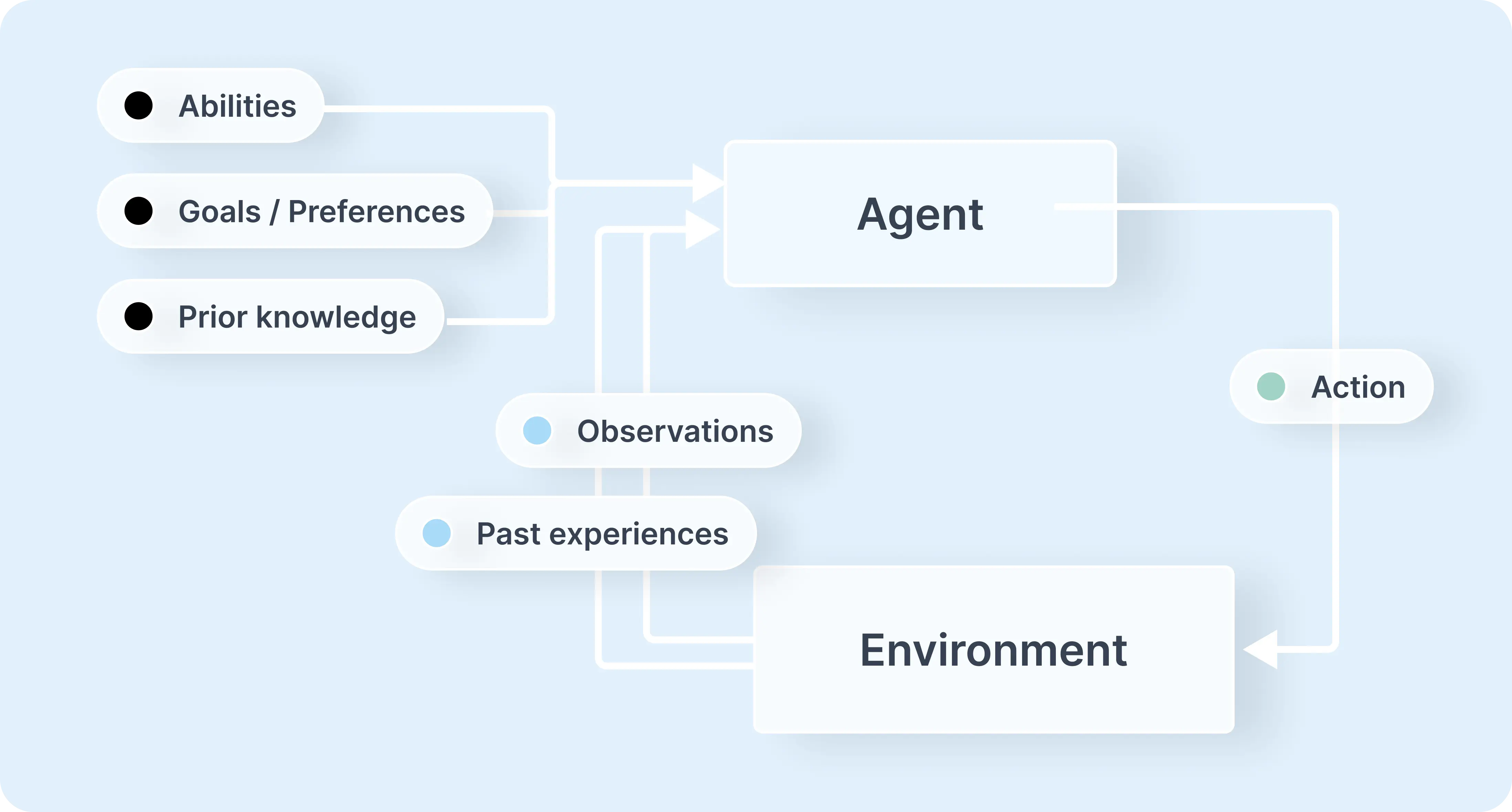

An AI agent is a software program that autonomously performs specific tasks using advanced decision-making abilities. It interacts with its environment by collecting data, processing it, and deciding on the best actions to achieve predefined goals.

For example, in a contact center, an AI agent can handle customer queries by asking contextually relevant questions to accurately identify issues, searching internal documents using techniques like semantic search, and delivering effective solutions in real time. When it encounters a problem beyond its current capabilities, it smoothly escalates the case to a human agent to maintain high levels of customer satisfaction.

How do AI agents work?

AI agents differ from traditional software programs by autonomously performing tasks based on rational decision-making principles, guided by predefined goals or instructions. They simplify and automate complex tasks by following a structured workflow:

- Setting goals: AI agents are given specific goals by the user, which they break down into smaller actionable tasks.

- Acquiring data: They collect the necessary information from their environment, such as data from physical sensors for robots or software inputs like customer queries for chatbots. They often access external sources on the internet or interact with other agents or models to gather data.

- Implementing the task: With the acquired data, AI agents methodically carry out the tasks, evaluating their progress and adjusting as needed based on feedback and internal logs.

By analyzing this data, AI agents predict the optimal outcomes aligned with the preset goals and determine what actions to take next. For example, self-driving cars use sensor data to navigate obstacles effectively. This iterative process continues until the agent achieves the designated goal.
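To make the workflow concrete, here is a minimal TypeScript sketch of that loop. It is not tied to any agent framework: the `environment` lookup table, the task-splitting rule in `planTasks`, and the escalation message are all hypothetical stand-ins for real tools, models, and hand-off logic.

```typescript
// Minimal, hypothetical agent loop: the environment, planner, and escalation
// rule are illustrative stand-ins, not part of any agent framework.

interface Task {
  name: string;
  done: boolean;
}

// Hypothetical data source the agent can query (a real agent might call
// search, external APIs, or other models here).
const environment = new Map<string, string>([
  ["order status", "shipped"],
  ["refund policy", "30 days"],
]);

// Setting goals: split a goal into smaller actionable tasks.
function planTasks(goal: string): Task[] {
  return goal.split(" and ").map((name) => ({ name: name.trim(), done: false }));
}

// Acquiring data: look up what a task needs, or undefined if nothing is found.
function acquireData(task: Task): string | undefined {
  return environment.get(task.name);
}

// Implementing the task: work through tasks until all are done (the goal is
// met) or the step budget runs out; escalate any task the agent cannot resolve.
function runAgent(goal: string, maxSteps = 10): string[] {
  const tasks = planTasks(goal);
  const log: string[] = [];
  for (let step = 0; step < maxSteps; step++) {
    const task = tasks.find((t) => !t.done);
    if (task === undefined) break; // every task is done: goal achieved
    const data = acquireData(task);
    log.push(data === undefined ? `${task.name}: escalate to human` : `${task.name}: ${data}`);
    task.done = true;
  }
  return log;
}

// Logs the shipped status, then an escalation for the unknown "warranty" task.
console.log(runAgent("order status and warranty"));
```

The `maxSteps` budget is a deliberate design choice: an agent that keeps retrying without converging is one of the failure modes that is hard to spot without a trace of each step.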

Challenges of Debugging AI agents

⚠️ Complex Decision Making

AI agents make decisions based on many inputs from various sources, including user interactions, environmental data, and internal states. Understanding how an agent processes and interprets this data requires deep insight into the underlying algorithms and models. Agents may also adapt their behavior over time through learning mechanisms, which makes their decision paths less predictable and harder to trace.

⚠️ Lack of Visibility into Internal States

Without proper tools, the internal workings of an AI agent can be a “black box,” with little visibility into how inputs are transformed into outputs. In systems where agents interact with multiple services or other agents, tracing the flow of information becomes even more challenging. In addition, traditional logging methods may not capture the necessary granularity of data needed to debug complex AI behaviors effectively.

⚠️ Contextual Debugging Challenges

AI agents often operate over extended interactions or sessions, where the context accumulates over time. Tracking the agent’s state throughout a session is essential for debugging since an error early in a session can have cascading effects, making it difficult to pinpoint the original source without comprehensive session data.

While understanding the internal workings of complex AI models is inherently challenging, tools like Helicone’s Sessions can significantly alleviate these difficulties.

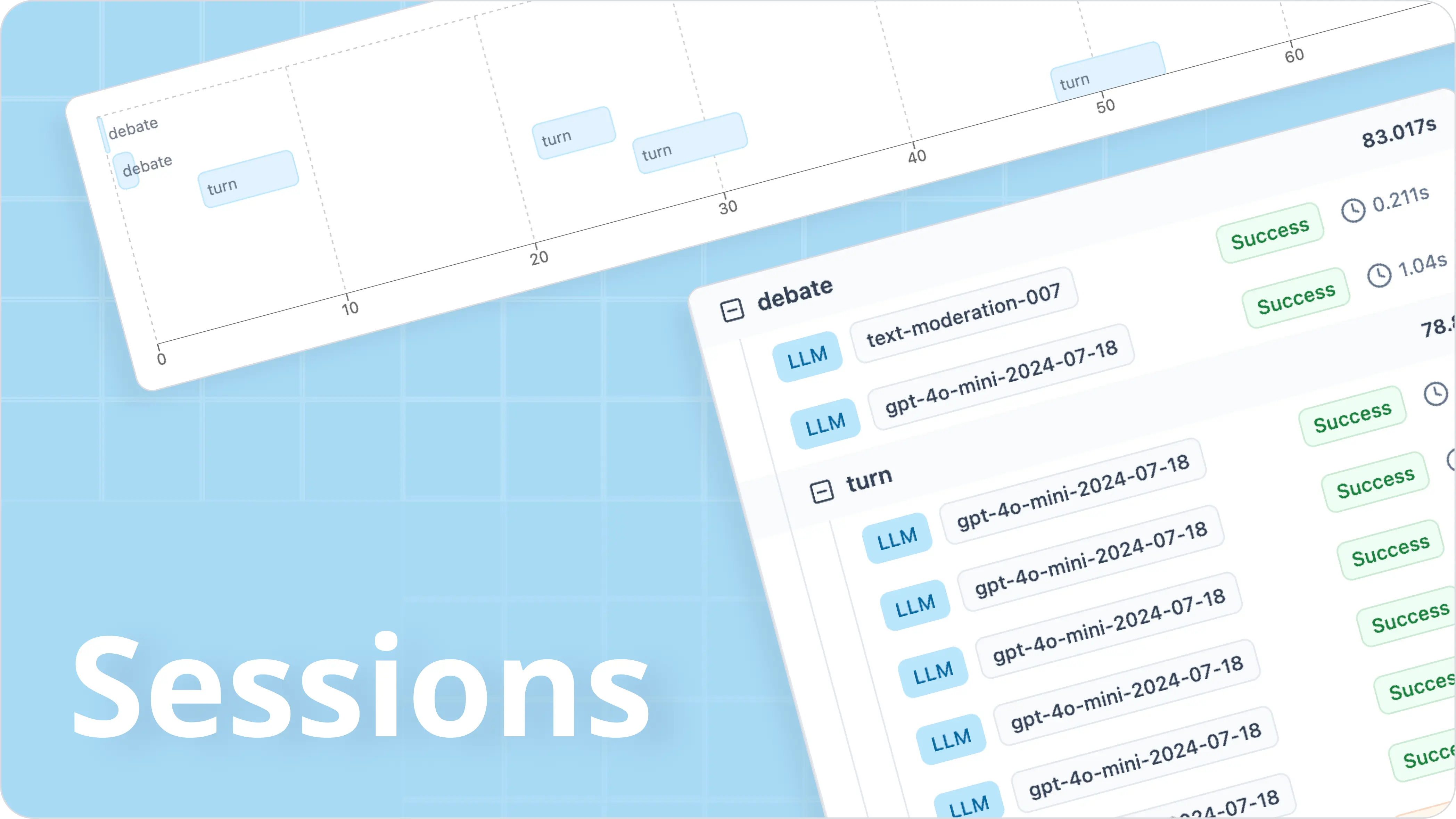

What are Sessions?

Sessions provide a simple way to organize and group related LLM calls, making it easier for developers to trace nested agent workflows and visualize interactions between the user and the AI chatbot or agent.

Instead of looking at isolated data points, sessions allow you to:

- see a comprehensive view of an entire conversation or interactive flow.

- drill down on specific LLM calls to view your agent’s flow of task execution.

- identify issues more quickly by understanding the context in which errors occur.

- refine your chatbot’s responses based on specific contexts.
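As a rough illustration of what grouping buys you, the sketch below takes a flat list of logged calls, groups them by session ID, orders each session chronologically, and prints it as an indented tree with errors shown inline. The `LoggedCall` shape is an assumption made for this example, not Helicone’s export format; the point is only to show why related calls are easier to debug together than as isolated data points.

```typescript
// Hypothetical shape of a logged LLM call (not Helicone's export format).
interface LoggedCall {
  sessionId: string;
  path: string; // e.g. "/booking/airline-api"
  startedAt: number; // epoch milliseconds
  error?: string;
}

// Group isolated calls by session ID and sort each session chronologically.
function groupBySession(calls: LoggedCall[]): Map<string, LoggedCall[]> {
  const sessions = new Map<string, LoggedCall[]>();
  for (const call of calls) {
    const list = sessions.get(call.sessionId) ?? [];
    list.push(call);
    sessions.set(call.sessionId, list);
  }
  sessions.forEach((list) => list.sort((a, b) => a.startedAt - b.startedAt));
  return sessions;
}

// Render one session as an indented tree: each extra path segment adds a level,
// and failed calls show their error inline.
function renderSession(calls: LoggedCall[]): string {
  return calls
    .map((c) => {
      const depth = Math.max(0, c.path.split("/").filter(Boolean).length - 1);
      const status = c.error ? ` ERROR: ${c.error}` : "";
      return `${"  ".repeat(depth)}${c.path}${status}`;
    })
    .join("\n");
}

// Calls from two sessions arrive interleaved and out of order.
const sample: LoggedCall[] = [
  { sessionId: "a", path: "/booking/airline-api", startedAt: 2, error: "timeout" },
  { sessionId: "b", path: "/booking", startedAt: 1 },
  { sessionId: "a", path: "/booking", startedAt: 0 },
];
console.log(renderSession(groupBySession(sample).get("a") ?? []));
```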

Using Sessions in Helicone

- Simply add the `Helicone-Session-Id` header to start tracking your sessions.

- Add the `Helicone-Session-Path` header to specify parent and child traces.

Here is an example in TypeScript:

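```typescript
import OpenAI from "openai";
import { randomUUID } from "crypto";

// Route requests through the Helicone proxy so every call is logged.
const openai = new OpenAI({
  apiKey: process.env.OPENAI_API_KEY,
  baseURL: "https://oai.helicone.ai/v1",
  defaultHeaders: {
    "Helicone-Auth": `Bearer ${process.env.HELICONE_API_KEY}`,
  },
});

// One ID per session; reuse it for every call in the same workflow.
const session = randomUUID();

async function main() {
  const completion = await openai.chat.completions.create(
    {
      messages: [
        {
          role: "user",
          content: "Generate an abstract for a course on space.",
        },
      ],
      model: "gpt-4",
    },
    {
      headers: {
        "Helicone-Session-Id": session, // groups this call into the session
        "Helicone-Session-Path": "/abstract", // places this call in the session's trace hierarchy
      },
    }
  );
  console.log(completion.choices[0].message.content);
}

main().catch(console.error);
```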
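The example above uses a single path. In a multi-step workflow, every call shares one session ID while the path grows with each level of nesting. The sketch below builds those headers for a hypothetical three-level course-generation flow. `sessionHeaders` and `childPath` are illustrative helpers, not part of the Helicone or OpenAI SDKs, and the sketch assumes, as the path header suggests, that slash-separated segments express parent/child nesting.

```typescript
import { randomUUID } from "node:crypto";

// Hypothetical helper: the per-request headers that group a call into a
// session (Helicone-Session-Id) and place it in the trace (Helicone-Session-Path).
function sessionHeaders(sessionId: string, path: string): Record<string, string> {
  return {
    "Helicone-Session-Id": sessionId,
    "Helicone-Session-Path": path,
  };
}

// Hypothetical helper: build a child path by appending a segment to its parent,
// so "/course" + "abstract" becomes "/course/abstract".
function childPath(parent: string, segment: string): string {
  return `${parent.replace(/\/+$/, "")}/${segment.replace(/^\/+/, "")}`;
}

// One session ID shared by every call in the workflow; only the path changes.
const sessionId = randomUUID();
const root = "/course";
const abstractPath = childPath(root, "abstract");
const outlinePath = childPath(abstractPath, "outline");

const plannedCalls = [root, abstractPath, outlinePath].map((path) =>
  sessionHeaders(sessionId, path),
);
console.log(plannedCalls.map((h) => h["Helicone-Session-Path"]));
```

Each header object can then be passed as the `headers` request option of `openai.chat.completions.create`, in the same place the single-call example sets them.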

How Industries Use Sessions to Debug AI Agents

Resolving Errors in Multi-Step Processes

Travel Industry

Challenge

In the travel industry, chatbots assist users through booking processes for flights, hotels, and car rentals. Errors can easily occur due to data parsing issues or integration problems with third-party services, leading to user frustration and incomplete bookings.

Solution

Sessions provide a complete trace of the booking interaction, allowing developers to pinpoint exactly where users encounter problems. If users frequently report missing flight confirmations, session traces can reveal whether the issue stems from input parsing errors or glitches with airline APIs, enabling targeted fixes.
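A minimal sketch of that idea, assuming a booking flow split into a parsing step and an airline API step: each step is wrapped so its outcome is recorded under its own session path, and the first failing path tells you which half of the integration to fix. The parser format, the `callAirlineApi` stub (which fails on purpose for one route), and the `traced` wrapper are all hypothetical; a real setup would send the session headers with each LLM or API call instead of collecting records locally.

```typescript
import { randomUUID } from "node:crypto";

// One record per step, keyed by session ID and path (hypothetical shape).
interface StepRecord {
  sessionId: string;
  path: string;
  ok: boolean;
  detail: string;
}

// Run one step, record its outcome under the session path, and rethrow failures.
function traced<T>(records: StepRecord[], sessionId: string, path: string, fn: () => T): T {
  try {
    const result = fn();
    records.push({ sessionId, path, ok: true, detail: "ok" });
    return result;
  } catch (err) {
    const detail = err instanceof Error ? err.message : String(err);
    records.push({ sessionId, path, ok: false, detail });
    throw err;
  }
}

// Hypothetical parser: expects input like "LHR-JFK 2024-07-01".
function parseRequest(input: string): { route: string; date: string } {
  const match = /^([A-Z]{3}-[A-Z]{3}) (\d{4}-\d{2}-\d{2})$/.exec(input.trim());
  if (match === null) throw new Error(`could not parse "${input}"`);
  return { route: match[1], date: match[2] };
}

// Hypothetical airline API stub that fails for one route to simulate an outage.
function callAirlineApi(route: string): string {
  if (route === "LHR-JFK") throw new Error("airline API timeout");
  return `CONF-${route}`;
}

// Run the booking flow in its own session and return the per-step trace.
function bookFlight(input: string): StepRecord[] {
  const sessionId = randomUUID();
  const records: StepRecord[] = [];
  try {
    const request = traced(records, sessionId, "/booking/parse-input", () => parseRequest(input));
    traced(records, sessionId, "/booking/airline-api", () => callAirlineApi(request.route));
  } catch {
    // The failure is already in the trace; stop the flow here.
  }
  return records;
}

// The first failing path says whether to fix input parsing or the airline integration.
function failingStep(records: StepRecord[]): string | undefined {
  return records.find((r) => !r.ok)?.path;
}

console.log(failingStep(bookFlight("flight to New York tomorrow"))); // prints /booking/parse-input
console.log(failingStep(bookFlight("LHR-JFK 2024-07-01"))); // prints /booking/airline-api
```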

Understanding User Intent for Personalization

Health and Fitness Industry

Challenge

Health and fitness chatbots must accurately interpret user queries to offer personalized workout plans and dietary advice. Misinterpretation leads to generic suggestions and decreased user satisfaction.

Solution

By analyzing session data, developers gain insights into user preferences and can adjust chatbot responses accordingly. If session logs show users often ask about strength training over cardio, developers can tweak the chatbot to provide more relevant strength training programs, enhancing personalization.
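As a simple sketch of that kind of analysis, the code below counts how many sessions mention each topic at least once. The `SessionLog` shape and the keyword lists are hypothetical, and keyword matching is a deliberately crude stand-in; a production pipeline might classify messages with an LLM or a trained classifier instead.

```typescript
// Hypothetical session export: the user messages from each session.
interface SessionLog {
  sessionId: string;
  userMessages: string[];
}

// Hypothetical keyword lists; a real system might use an LLM or classifier instead.
const topicKeywords: Record<string, string[]> = {
  strength: ["strength", "weights", "lifting", "squat"],
  cardio: ["cardio", "running", "cycling", "hiit"],
};

// Count how many sessions mention each topic at least once.
function topicCounts(sessions: SessionLog[]): Record<string, number> {
  const counts: Record<string, number> = {};
  for (const topic of Object.keys(topicKeywords)) counts[topic] = 0;
  for (const session of sessions) {
    const text = session.userMessages.join(" ").toLowerCase();
    for (const [topic, keywords] of Object.entries(topicKeywords)) {
      if (keywords.some((k) => text.includes(k))) counts[topic] += 1;
    }
  }
  return counts;
}

const logs: SessionLog[] = [
  { sessionId: "s1", userMessages: ["How do I start lifting weights?"] },
  { sessionId: "s2", userMessages: ["Is my squat form OK?", "Should I add cardio?"] },
  { sessionId: "s3", userMessages: ["What should I eat after a workout?"] },
];
console.log(topicCounts(logs)); // { strength: 2, cardio: 1 }
```

Counting per session rather than per message keeps one very talkative user from skewing the totals.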

Improving Context Management

Virtual Assistants

Challenge

Virtual assistants like Siri and Alexa handle a wide range of queries and need to maintain context during interactions, especially when users switch topics abruptly.

Solution

Sessions track user interactions over time, revealing how the assistant manages context shifts and where improvements are needed. If assistants struggle when users change from setting a reminder to asking about the weather, session analysis can help developers refine context management algorithms for smoother conversations.
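One way to turn that into a concrete check, sketched under the assumption that each turn in a session is logged with both the intent the user expressed and the intent the assistant acted on: flag the turns where the user switched topics but the assistant kept handling something else. The `Turn` shape, intent labels, and paths below are hypothetical.

```typescript
// Hypothetical per-turn record: what the user asked for and what the assistant
// acted on. Assumes an intent label for both is logged with each session turn.
interface Turn {
  path: string;
  userIntent: string;
  handledIntent: string;
}

// Flag turns where the user switched topics but the assistant acted on a
// different intent, e.g. it kept treating a weather question as a reminder.
function mishandledShifts(turns: Turn[]): Turn[] {
  return turns.filter(
    (turn, i) =>
      i > 0 &&
      turn.userIntent !== turns[i - 1].userIntent &&
      turn.handledIntent !== turn.userIntent,
  );
}

const conversation: Turn[] = [
  { path: "/assistant/turn-1", userIntent: "set-reminder", handledIntent: "set-reminder" },
  { path: "/assistant/turn-2", userIntent: "weather", handledIntent: "set-reminder" },
  { path: "/assistant/turn-3", userIntent: "weather", handledIntent: "weather" },
];
console.log(mishandledShifts(conversation).map((t) => t.path)); // [ '/assistant/turn-2' ]
```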

Are AI agents the future?

AI agents have an incredible ability to perform tasks and make decisions autonomously, with minimal human intervention. We’re already seeing AI agents in action across various fields like customer service, healthcare, education, legal work, and even autonomous driving.

However, for AI agents to truly succeed, we need to overcome some challenges. Ensuring ethical use, improving their reliability and accuracy, and addressing concerns about job displacement are all critical issues we must tackle. That’s why it’s more important than ever to adopt tools that monitor AI agents and chatbots and give you visibility into their performance, so you can make sure they are actually addressing user inquiries.